Exclusive: San Francisco's Mind-Reading Start-up House

Alljoined wants to find your emotional tokens

I could do the work of putting San Francisco in the dateline of this story, but, let’s be honest, this hardware house could not exist anywhere else.

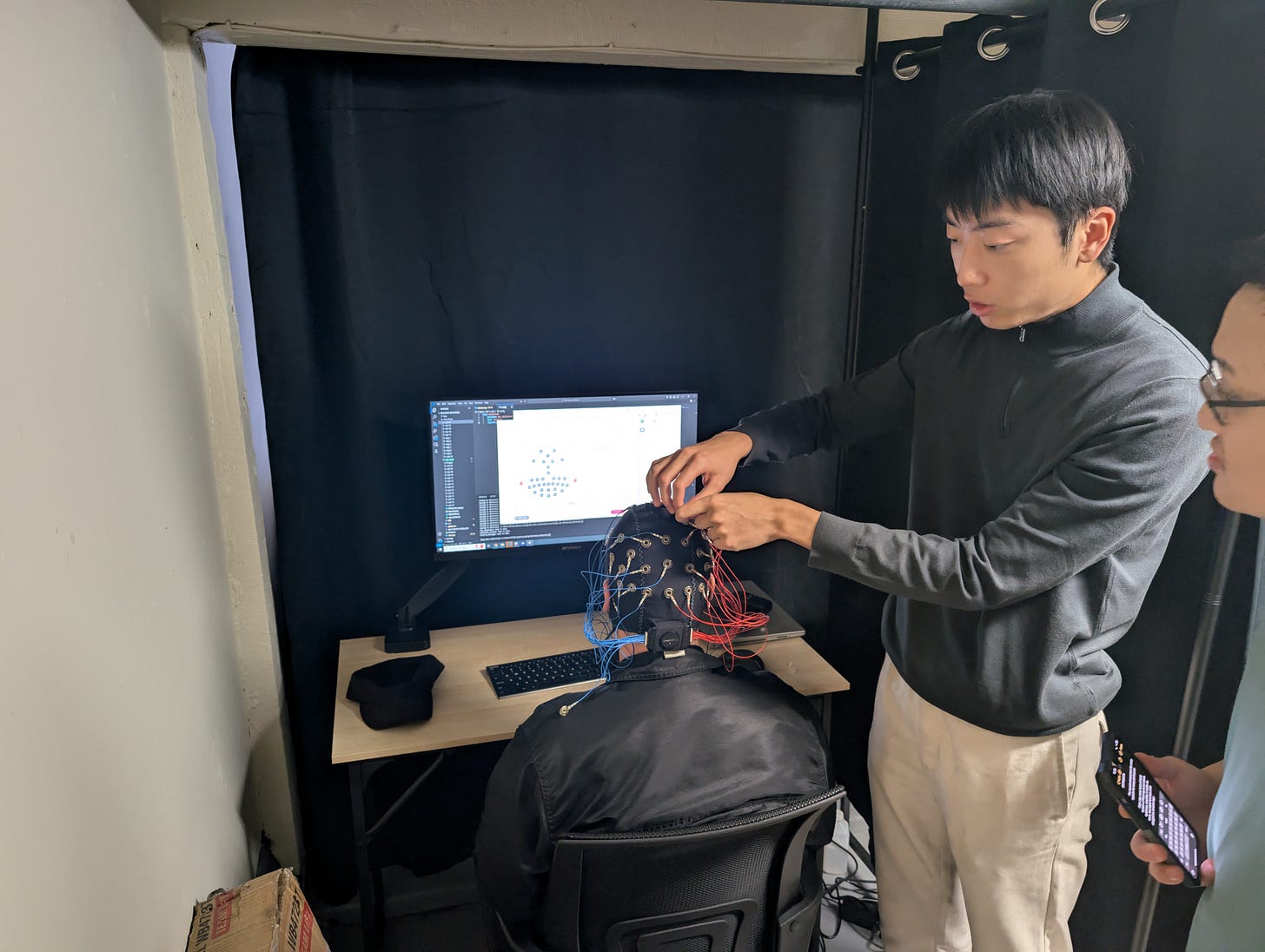

It was mid-February, and I’d turned up at an address in the Inner Richmond. Jonathan Xu, all of twenty-four years old, opened the door and greeted me with a smile. A few moments later, we were both in the basement where a children’s music teacher named Mathew had an EEG (electroencephalogram) device strapped to his head so that Xu could try and read his mind.

Thanks to Neuralink, a host of other start-ups and headline writers trying to seduce SEO bots, you’ve likely read a lot about mind-reading technology over the past few years. We’re in the midst of a bull market when it comes to brain-computer interface (BCI) systems and attempts to place electrodes very close to neurons so that we can read out the electrical patterns they produce when people think. To that extent, Xu and his company Alljoined are nothing new.

The well-funded BCI start-ups tend toward implanting electrodes in the brain or on top of the brain’s cortical surface. This all makes sense because you get better signal and hence better data the closer you are to the neuron when it fires. Of course, getting really close to neurons comes with heavy costs like brain surgery and foreign objects touching brain tissue, which can cause damage and infections.

For decades, researchers have used EEG devices in a bid to extract brain data via less severe methods than surgery. These products place electrodes all across the scalp to pick up electrical activity from firing neurons. You can see different parts of the brain light up when people look at objects, talk or emote.

While EEG is non-invasive, affordable, and easy to set up, the data it collects is far from perfect. Signals are distorted by layers of brain tissue, the skull, and even hair, and they’re prone to interference from external noise, movement, and inconsistent contact with the scalp. Because of these limitations, scientists have historically been skeptical of EEG’s ability to provide more than a rough, low-resolution snapshot of brain activity.

Alljoined’s CEO Xu, though, contends that advances in artificial intelligence technology have now made it possible to find patterns and insights in EEG data that researchers have previously missed. Alljoined’s thesis is that the data might be good enough to categorize the neuronal patterns that occur when someone thinks of a particular object like a ball or a certain word like, er, ball. Or, as Xu put it in AI lingo,

“Our whole intuition is to take transformers and large data sets and decode all the stuff that we thought was not possible from EEG for the first time. It's really thanks to being able to pay attention to every time point and every electrical position. Because of that we're able to decode images now and, in the future, emotion and inner monologues.”

There are reasons to think Xu is onto something. He received a degree in computer science from the ever-impressive University of Waterloo and contributed to the MindEye2 paper that came out last year. That paper showed AI models pulling incredible new insights out of fMRI (functional magnetic resonance imaging) data and doing so based on relatively few sessions with relatively few patients. People would be shown images on a screen in quick succession – a bus, a group of baseball players, flowers in a vase, a cat (of course) – and the AIs could peruse the brain data and deduce what the people had been looking at with remarkable accuracy.

Xu did not want to rely on expensive fMRI machines and so began doing his own experiments with an Emotiv EEG device that anyone can buy online for $2,299. He first began performing experiments in the same communal hacker house where we found HudZah building a nuclear fusor. Later, he moved to the Inner Richmond and set up Alljoined with backing from Khosla Ventures, Jeff Dean and others.

The new house is wonderful.

Down in the basement/garage, Xu and Alljoined’s chief technology officer Reese Kneeland have set up a makeshift laboratory. There’s a homemade GPU cluster on the floor next to the water heater that powers the AI models. There’s a gym where a car might normally be parked. And in the basement’s main room there are two trial stations where participants sit in front of laptops while flanked by black screens to keep them isolated from each other and their surroundings. Just behind the trial stations, there’s a bedroom with a mattress on the floor.

Xu finds the trial participants online, and they’re offered some money to come to the house and look at images for a couple of hours. He and his coworkers place each participant in a chair and then fit the Emotiv cap on their heads. Some brain lube is injected into ports on the Emotiv device to improve the connection between the electrodes and the scalp. Then it’s up to the participant to watch images flash in front of their face as the data is recorded and later fed into the GPU cluster.

(Alljoined made their upstairs bathroom as spa-like as possible to give the participants a pleasant place to wash the brain goo out of their hair. A nice touch.)

Things have been going well for Alljoined so far. “We've demonstrated that by doubling the training set size we get around 75 percent of the way to lab grade hardware,” Xu said. “We're working with $2,000 hardware, and then we just doubled the training set size and we're getting 75 percent of the reconstruction accuracy as $100,000 hardware. Now we're just going to push that more and see when we meet it and when do we surpass it.”

Alljoined has participants cycling through the house in back-to-back sessions and has now amassed the world’s largest dataset for this type of EEG work.

There’s, of course, much left to do. Alljoined’s goal is not just to read out images from brain data but to get to things like emotions and thoughts. Eventually such data would be gathered from a consumer-style device that works in noisy, regular life conditions. At this time, Xu has no idea if the technology or his team’s engineering skills can or will make these things possible.[1]

Once anyone from brain implant land starts talking about yanking emotions and whole thoughts from skulls, we enter more uncomfortable territory. People in the BCI arena tend to talk about these ideas in clinical, matter-of-fact terms. Xu, for example, is the first person I’ve ever heard use the term “emotional token.”

“Let’s say we can decode twenty different emotions from you,” Xu said. “Then you can explore a bunch of different interfaces like plugging that into ChatGPT. Now you have an emotional token that you can augment it with. You can have the AI know how you’re feeling and respond to that.”

The reality, however, is that we are very much heading in this direction or at least trying to. Hundreds of millions of dollars have been pumped into BCI technology over the past decade. Many of the implants right now have been aimed at people in dire conditions, helping people suffering from paralysis and ALS, for example, move cursors on their computer screens and communicate. But the end goal for many people in the BCI field is to shift this technology into the consumer realm and find new ways for us all to interact with machines and with each other.

“If you can directly interface with technology and use an affordable and consumer accessible brain computer interface, that might be something that is the next revolution of technology,” Xu said. “It might be a way that is better than typing on a keyboard.”

If you’re not into this, I understand. If you are into this, well, there’s a house in the Inner Richmond where you can live rent free if you’re willing to work on the technology day and night.

Others in BCI Land will no doubt harbor plenty of skepticism as to whether these techniques can end up somewhere useful at all. Companies like Neuralink remain so adamant about being close to the neurons because they see this as the only way to tap into the data they need to make huge leaps forward with BCI devices.

[1] socratica mafia